Learn how to integrate OpenAI with the Xweather MCP server for real-time forecasts and decisions.

You’ve likely seen examples of AI agents fetching weather data in tutorials and demos across the web. It’s one of the most common use cases, not only because it’s easy to understand and familiar to users, but because it perfectly fits what an AI is meant to help with. In an industry where AI is the hammer and there is a seemingly infinite number of nails, the Xweather MCP server stands out as the golden nail among the bunch.

If you want to build agents that respond to changing weather conditions, you need more than a standard API call. Though the Xweather API excels at providing many unique datasets and boasts some of the best update times across all weather APIs, it can be supercharged by connecting it to a Large Language Model (LLM). That way, the LLM can always retrieve the most up-to-date information.

Model Context Protocol (MCP) defines a standard for giving AI systems access to external data that LLMs otherwise do not have. Traditionally, models such as GPT from OpenAI or Sonnet from Anthropic can only draw on the data they were trained on, which ends at a cutoff date, making it impossible to retrieve live information. More recently, models have gained the ability to perform web searches, but the results are nearly always unpredictable, are rarely consistent in their sources, and seldom come back structured. MCP is the tool that bridges that gap. The Xweather MCP server gives you access to live forecasts, severe weather alerts, and historical records, and combines that with the capabilities of an LLM you are already familiar with.

In this guide, we’ll show how to use the weather MCP server programmatically through the OpenAI Responses API. When we’re done, you’ll have the confidence to build your own systems, having learned how to:

Connect OpenAI to the Xweather MCP server programmatically.

Discover and use the available MCP tools for weather data.

Let an agent reason over live forecasts and alerts.

By the end, you’ll have a weather-aware agent that uses real, verified data through a standard protocol and handles it reliably in a development setting.

Why AI agents need live weather data

To reiterate, most AI agents today operate on static knowledge. Their outputs are only as fresh as the data they were trained or tuned on, which means that they’re poorly suited to handle dynamic information. Weather data is the perfect example of this gap. It changes hourly, is highly localized, and can directly impact safety and business operations.

Let’s look at some examples of how a model works without MCP:

AI assistants can hallucinate weather: Newer models without web search access will sometimes route a user to a trusted weather website when asked about the weather. However, older models have been shown to hallucinate answers when asked about weather information. If you ask an older model, “What’s the weather condition in Manchester right now?” you may get a confident but outdated or outright fabricated answer. Since there is no real-time integration, the model relies on guesses from past data. MCP ensures that there is no guessing involved, regardless of the model.

Routing models shouldn’t rely on stale information: A logistics agent may suggest the fastest route between two cities without knowing that heavy rainfall has closed key roads. Without live data, its output is less reliable than a basic map service. The Xweather MCP server, combined with a mapping solution such as Mapbox MCP, can solve this problem.

These examples reflect a key point: models can’t respond to constantly changing weather conditions on their own. If you built your own model, you could decide to retrain it. However, retraining doesn’t give it awareness of current conditions. The Xweather MCP server bridges this gap, giving agents a structured way to access forecasts, alerts, and historical records through a single interface. Let’s take a closer look.

About the Xweather MCP server

The Xweather MCP server connects LLMs directly to Xweather’s data platform. You can connect it to ChatGPT or Claude and use those hosted tools to integrate the server in minutes. See our Agents integration guide for a rundown on that, and to explore all of the tools the server offers. If you would like to dive deeper and explore a code-driven approach to using the server, so that you can build your own systems or logic on top of it, this guide is for you.

The server is built around this core idea: Turn weather questions into weather answers. Models answer natural language queries with weather insights, while the server handles the orchestration behind the scenes: choosing which API calls to make and which input parameters and values to use. You can query past conditions, current observations, or forecasts through a single interface, without needing to know which endpoint to call. Just ask a question! You can also use the added power of an LLM to generate reports, dashboards, graphics, and more from the answers.

How to connect the Xweather MCP server using the OpenAI Responses API

We’ll create a minimal weather AI assistant and connect it to the Xweather MCP server as a live data source. We will use the OpenAI Responses API to do so, as it is lightweight and doesn’t need all of the fancy tooling that comes with the Agents SDK, but still supports things like state management for conversations and streaming, which returns data in chunks as it’s being generated by the server.

Prerequisites

To follow along with this project, ensure you have the following:

An OpenAI API key available from your OpenAI dashboard

Xweather API credentials (Client ID and Secret), which you can obtain by signing up for a developer account trial via https://signup.xweather.com/developer

Docker, or Python 3.9+ if you prefer to run the project without Docker.

Let’s get started! Pull the code from GitHub and follow the instructions in the README.

We can run the project with this command:

Try it with a quick prompt like:

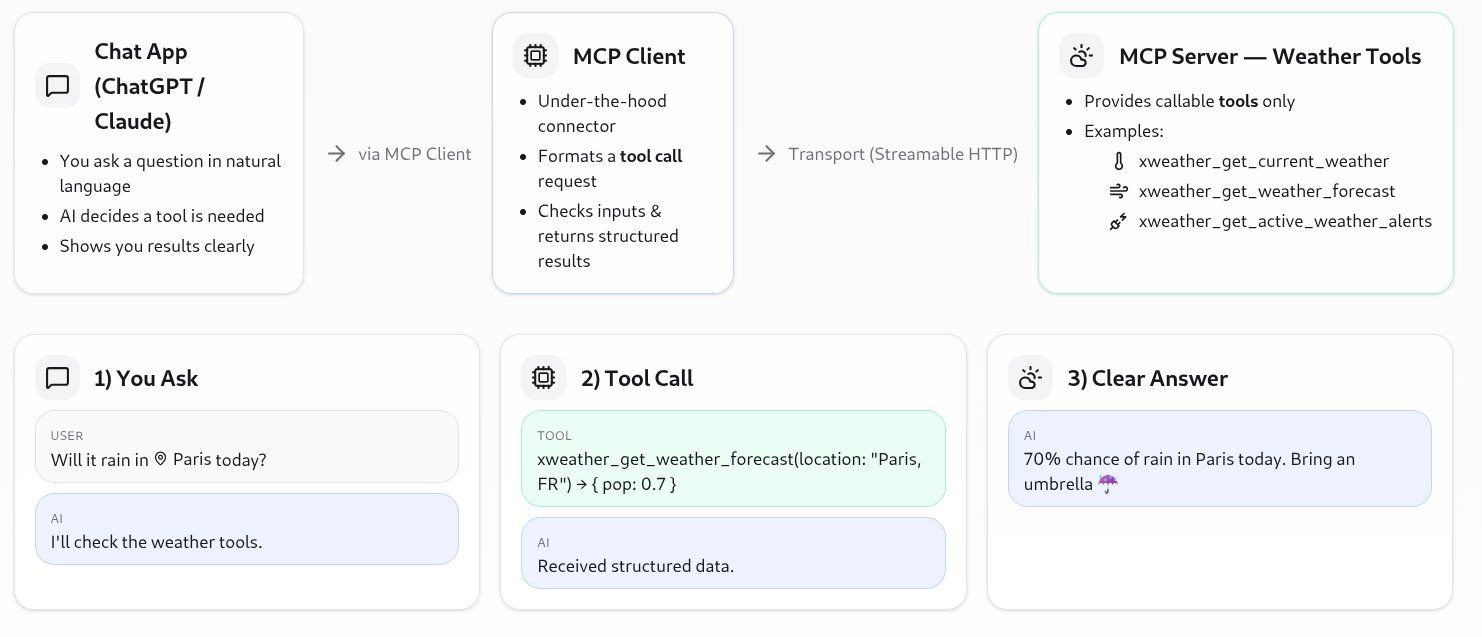

The underlying MCP client workflow looks like this:
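As a rough sketch of that workflow (not the repository’s actual code), the snippet below describes a remote MCP server as a tool entry for the Responses API and sends a single question. The `XWEATHER_MCP_URL` variable, the model name, and the way the credentials are packed into the `Authorization` header are placeholders and assumptions; check the Xweather MCP documentation for the real values.

```python
import os


def build_mcp_tool(server_url: str, client_id: str, client_secret: str) -> dict:
    """Describe a remote MCP server as a tool entry for the Responses API."""
    return {
        "type": "mcp",
        "server_label": "xweather",
        "server_url": server_url,
        # Assumption: this header format is a placeholder, not the
        # documented Xweather scheme.
        "headers": {"Authorization": f"Bearer {client_id}_{client_secret}"},
        # Skip the manual approval step for each tool call.
        "require_approval": "never",
    }


def ask_once(client, question: str, tool: dict, model: str = "gpt-4.1") -> str:
    """Send one question; the model decides which MCP tools to call."""
    response = client.responses.create(model=model, input=question, tools=[tool])
    return response.output_text


def main() -> None:
    # Imported here so the helpers above work without the SDK installed.
    from openai import OpenAI  # pip install openai

    tool = build_mcp_tool(
        os.environ["XWEATHER_MCP_URL"],  # hypothetical variable name
        os.environ["XWEATHER_CLIENT_ID"],
        os.environ["XWEATHER_CLIENT_SECRET"],
    )
    # OpenAI() reads OPENAI_API_KEY from the environment.
    print(ask_once(OpenAI(), "What is the weather in Minneapolis right now?", tool))
```

Calling `main()` with the environment variables set would run one request end to end. Keeping the tool description in its own function makes it easy to reuse the same configuration across requests.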

Next, let’s see some practical ways this integration can be applied to build intelligent, weather-aware solutions across various domains.

Testing agentic decisions: Should we reroute?

So far, the interactive CLI shows us that the integration works with our tools. Now let’s see how the assistant could potentially use live data to make a real operational decision.

Try a sample question like this:

In this case, the assistant responded with:

What happened behind the scenes:

The LLM retrieved the next day’s weather forecast for Minneapolis using the tool xweather_get_forecast_weather, our own implementation for retrieving the forecast. This tool queries our internal API /forecasts endpoint with the correct time range.

Since we provided criteria (albeit vague) to compare against, it determined that 58 degrees Fahrenheit would not preclude deliveries and returned a clear recommendation.

This simple interaction shows how an agent can use the Xweather MCP to evaluate real conditions and return a clear operational recommendation. However, in a real scenario, we would want to define more stringent criteria depending on the use case. We could also use the power of other MCP servers, such as Mapbox, to combine routing information with weather. The same pattern applies across many other domains. Let’s look at a few practical ways developers can build on this foundation.
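To make the idea of more stringent criteria concrete, here is a minimal sketch of explicit delivery thresholds applied to a forecast summary. The threshold values and the dictionary keys (`min_temp_f`, `max_wind_mph`, `snow_in`) are hypothetical and are not Xweather field names; you would map the tool’s actual response onto whatever shape you choose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeliveryCriteria:
    """Hypothetical thresholds; tune these for your own operation."""
    min_temp_f: float = 20.0
    max_wind_mph: float = 35.0
    max_snow_in: float = 2.0


def should_delay(forecast: dict, criteria: DeliveryCriteria = DeliveryCriteria()):
    """Return (delay?, reasons) for a forecast summary dict."""
    reasons = []
    if forecast["min_temp_f"] < criteria.min_temp_f:
        reasons.append(f"low of {forecast['min_temp_f']}F is below {criteria.min_temp_f}F")
    if forecast["max_wind_mph"] > criteria.max_wind_mph:
        reasons.append(f"wind of {forecast['max_wind_mph']} mph exceeds {criteria.max_wind_mph} mph")
    if forecast["snow_in"] > criteria.max_snow_in:
        reasons.append(f"snowfall of {forecast['snow_in']} in exceeds {criteria.max_snow_in} in")
    # Any failed check is enough to recommend a delay.
    return bool(reasons), reasons


# A mild 58F day passes every check, so no delay is recommended.
delay, why = should_delay({"min_temp_f": 58, "max_wind_mph": 10, "snow_in": 0})
```

Rules like these can live in code, as here, or be spelled out in the prompt so the model applies them. Keeping them in code makes the decision easier to test and audit.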

Diving into the code

No code would be useful without an explanation. So, how did we build this?

The most useful part is in src/weather_assistant.py, where we create a basic class for the assistant that instantiates the client object using our OpenAI API key. We keep track of past messages in the agent using the Conversations API. Now, the OpenAI documentation is kind of all over the place, so we will try to reference all the resources we used.

We implemented a simple ask function that takes the user’s question and sends it along with the MCP server configuration and the conversation ID. The stream is a context manager, and it can emit many different types of events as the request progresses. We used this piece of documentation to implement the context manager flow, and this piece to determine all of the different types of events that could be generated. Beyond that, it took a bit of trial and error (yay, debugging!) to determine which events were responsible for what we wanted to display. In the end, that was ResponseTextDeltaEvent for the live server-generated response chunks and ResponseOutputItemAddedEvent for detecting MCP tool listings and calls. If you want to debug the stream yourself, you could add something like this:
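One possible shape for that event handling, offered as a sketch and not as the repository’s implementation, is a small function that inspects each event’s `type` string. The helper names and the bracketed status messages are made up for illustration. The `SimpleNamespace` objects stand in for real SDK events so the handler can be tried offline.

```python
from types import SimpleNamespace
from typing import Optional


def describe_event(event) -> Optional[str]:
    """Return printable text for the events we care about, else None."""
    event_type = getattr(event, "type", None)
    if event_type == "response.output_text.delta":
        # ResponseTextDeltaEvent: one chunk of the model's answer.
        return event.delta
    if event_type == "response.output_item.added":
        # ResponseOutputItemAddedEvent: a new output item, such as an MCP step.
        item_type = getattr(event.item, "type", None)
        if item_type == "mcp_list_tools":
            return "[listing MCP tools]"
        if item_type == "mcp_call":
            return f"[calling tool: {getattr(event.item, 'name', '?')}]"
    return None


def debug_event(event) -> str:
    """One-line summary of any event, handy while exploring the stream."""
    return f"{type(event).__name__}: {getattr(event, 'type', 'unknown')}"


# Stand-in events for offline experimentation (not real SDK objects).
sample_events = [
    SimpleNamespace(type="response.output_item.added",
                    item=SimpleNamespace(type="mcp_call", name="xweather_get_forecast_weather")),
    SimpleNamespace(type="response.output_text.delta", delta="Tomorrow looks mild."),
    SimpleNamespace(type="response.completed"),
]
shown = [describe_event(e) for e in sample_events]
```

Inside the streaming loop, you would call `describe_event(event)` for each event and print any result that is not `None`. Temporarily printing `debug_event(event)` instead shows every event type the stream emits.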

The other part here, src/interactive.py, is just a little interactive loop that lets us ask questions via the CLI. One neat part is that we can chain questions, and the server will remember our history and use it as context, so we can ask follow-up questions like, "And what about the air quality?"
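A loop like that can be sketched in a few lines. The version below is a hypothetical stand-in for src/interactive.py, not a copy of it: `run_repl`, the `you> ` prompt, and the `exit`/`quit` commands are assumptions. The `ask`, `read`, and `write` callables are injected so the loop can run without a terminal or a network connection.

```python
from typing import Callable


def run_repl(ask: Callable[[str], str],
             read: Callable[[str], str] = input,
             write: Callable[[str], None] = print) -> int:
    """Read questions until 'exit' or 'quit'; return how many were asked."""
    asked = 0
    while True:
        try:
            question = read("you> ").strip()
        except (EOFError, KeyboardInterrupt):
            break  # Ctrl-D or Ctrl-C ends the session.
        if question.lower() in {"exit", "quit"}:
            break
        if not question:
            continue  # Ignore empty lines.
        write(ask(question))
        asked += 1
    return asked
```

In the real project, `ask` would be the assistant’s ask method. Since the conversation state lives with that object, the loop itself stays stateless and follow-up questions work without extra bookkeeping.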

Practical applications

Xweather’s short-term forecasts are more accurate than public sources, which makes the MCP server extremely valuable. The table below shows practical ways to apply the MCP server across different business domains.

| Use case | What the agent does | Query examples |
|---|---|---|
| Everyday personal use | Answer weather and routing questions with live accuracy | “Is it safe to drive through Denver tonight?” |
| Operational systems management | Adjust routes and logistics plans dynamically | “If it starts snowing in Chicago, reroute I-90 trucks south.” |
| Safety systems management | Trigger alerts based on severe weather thresholds | “Warn field teams when lightning is detected nearby.” |
| Analytics & research | Enrich ML models with accurate, timestamped weather context | “Correlate temperature swings with power grid load.” |

The MCP server can turn accurate, real-time weather data into meaningful solutions. It gives developers the flexibility to build intelligent tools, enhance operations, and support research in ways that respond to the changing world.

Final thoughts

We’ve explored how Xweather’s MCP server acts as a reliable bridge between AI agents and live weather data, and we also dove into the code to build a client ourselves using the OpenAI Responses API. Backed by the power of the raw API, the MCP server allows weather questions to be transformed into weather answers. How will you use it?

You can check out all of its capabilities by creating an Xweather developer account and setting up the MCP server in your own projects.